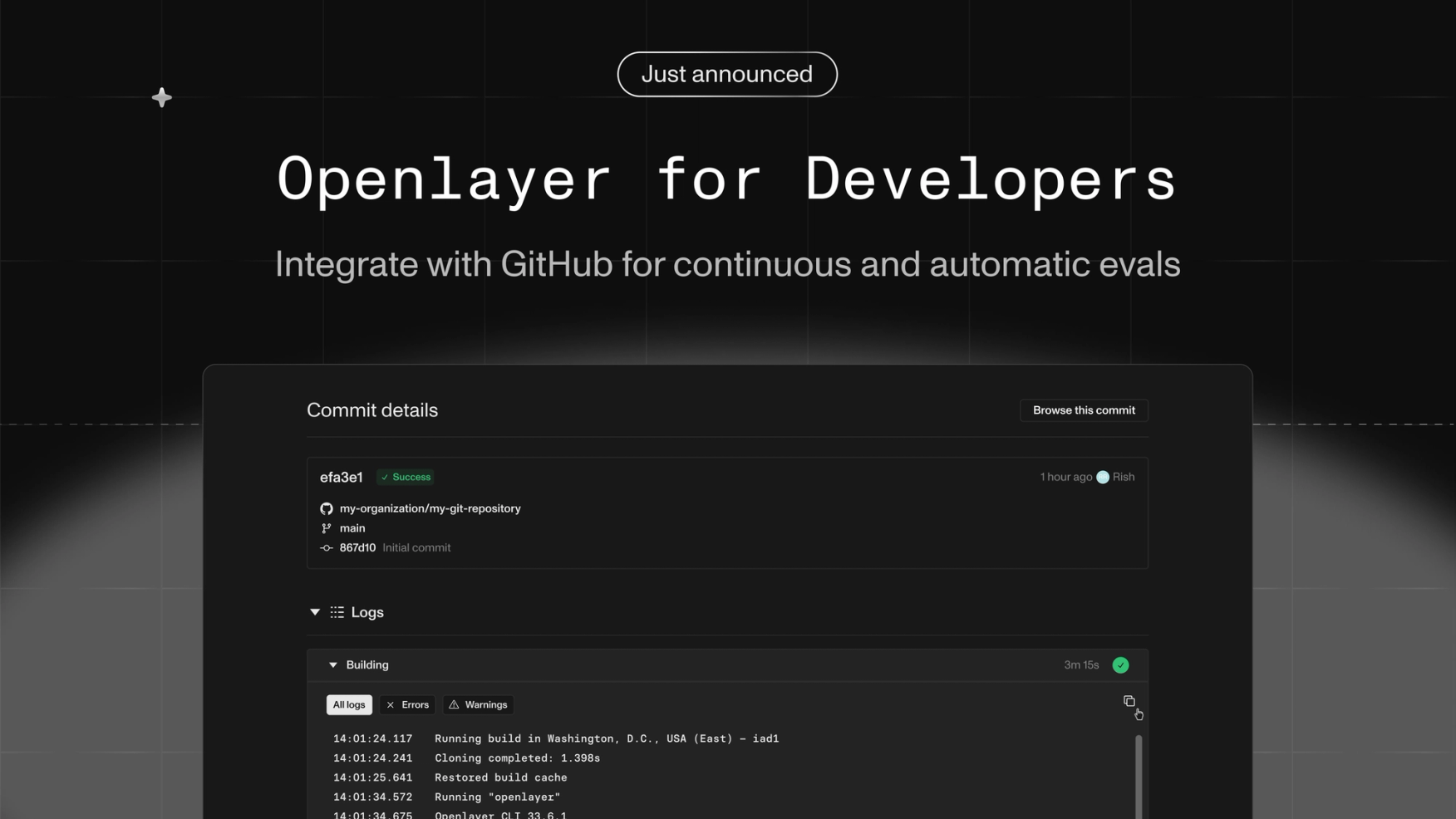

Pausing tests, checks for duplicate keys, and new integrations

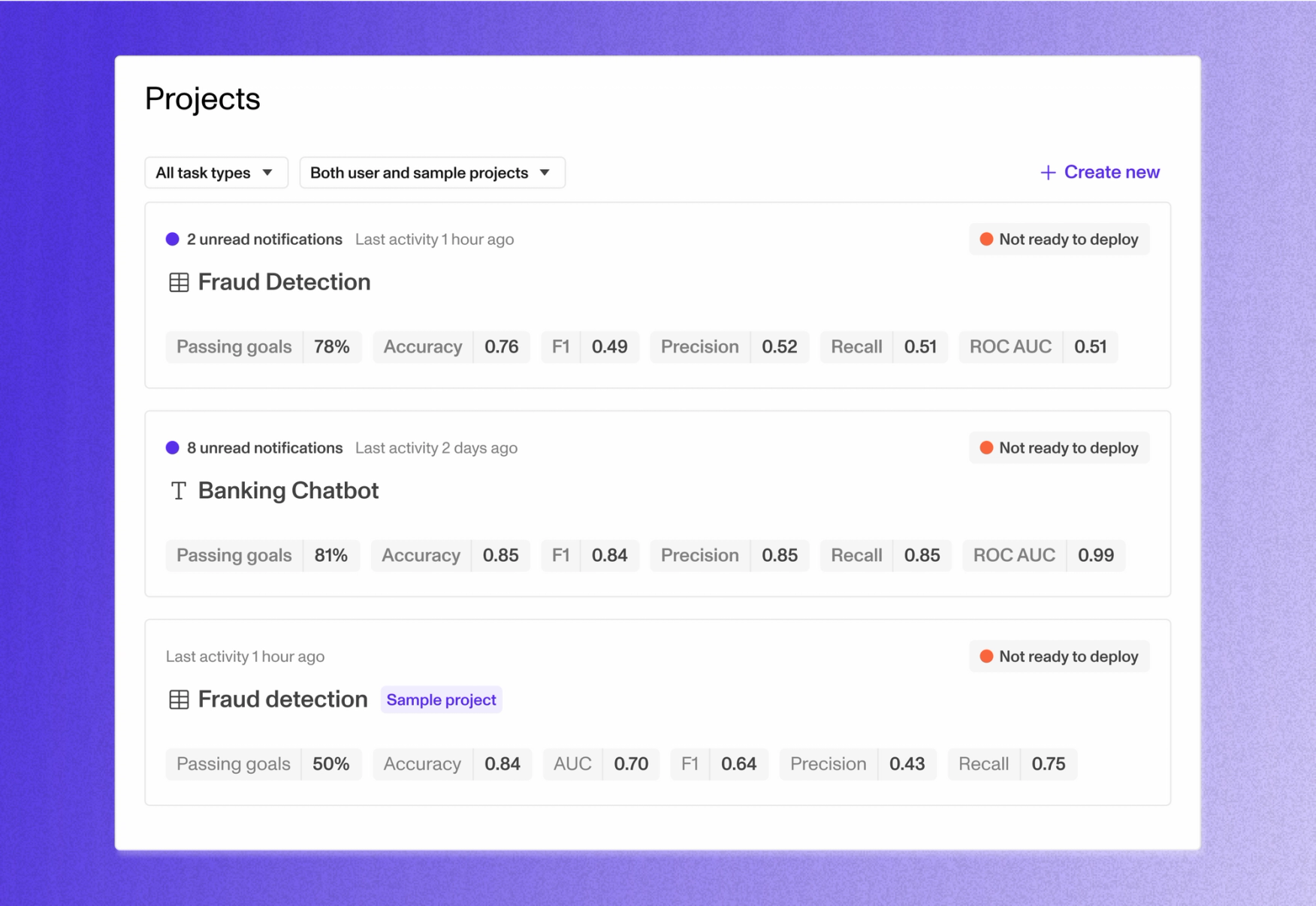

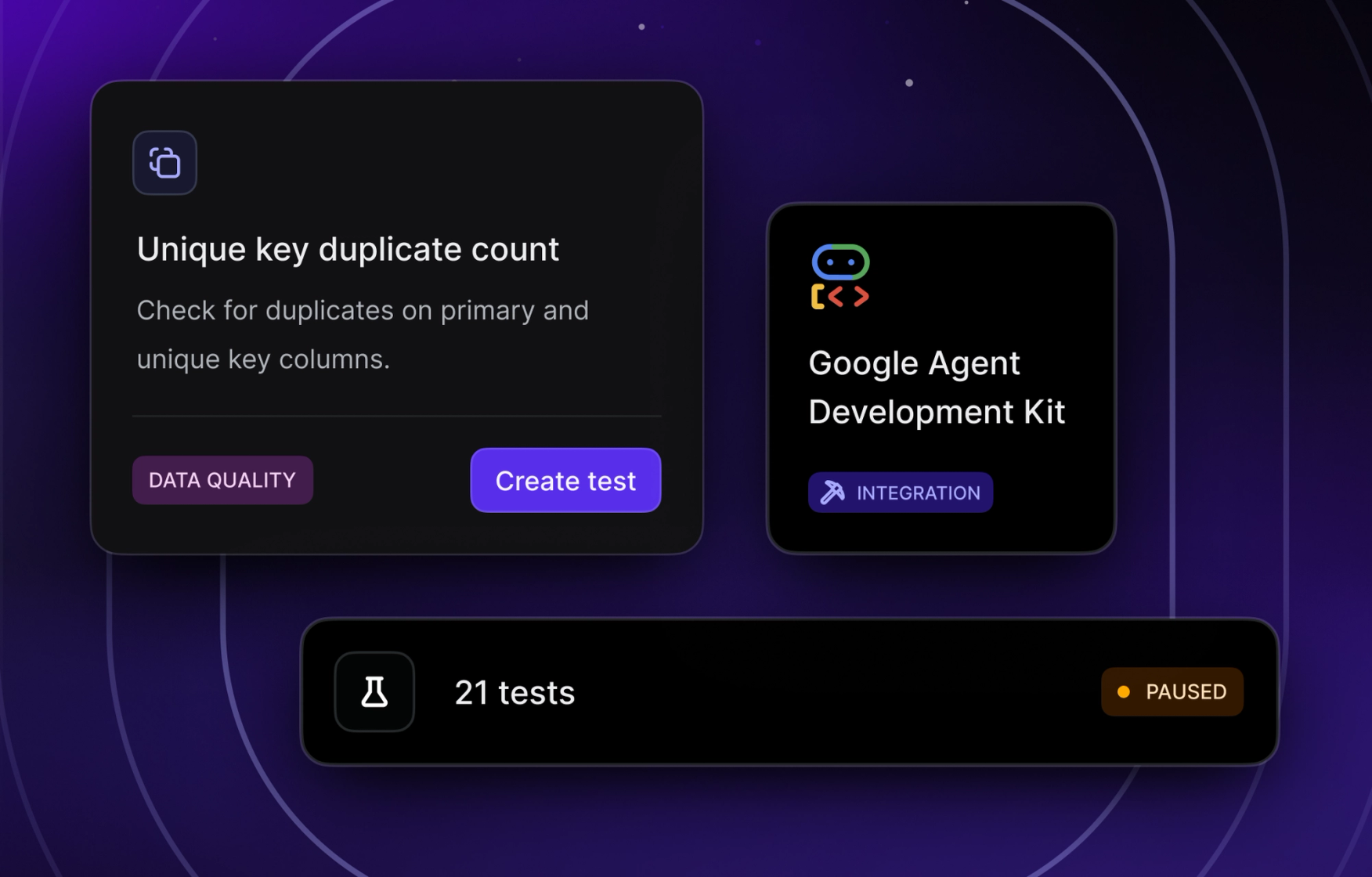

This month, we shipped a range of improvements across Openlayer, including new integrations, tests, and developer features. We’re also now available on the AWS, Azure, and Google Cloud marketplaces, and we’ve added support for evaluating multi-agent systems built with Google’s Agent Development Kit. Read on for the full list of updates and enhancements.

Features

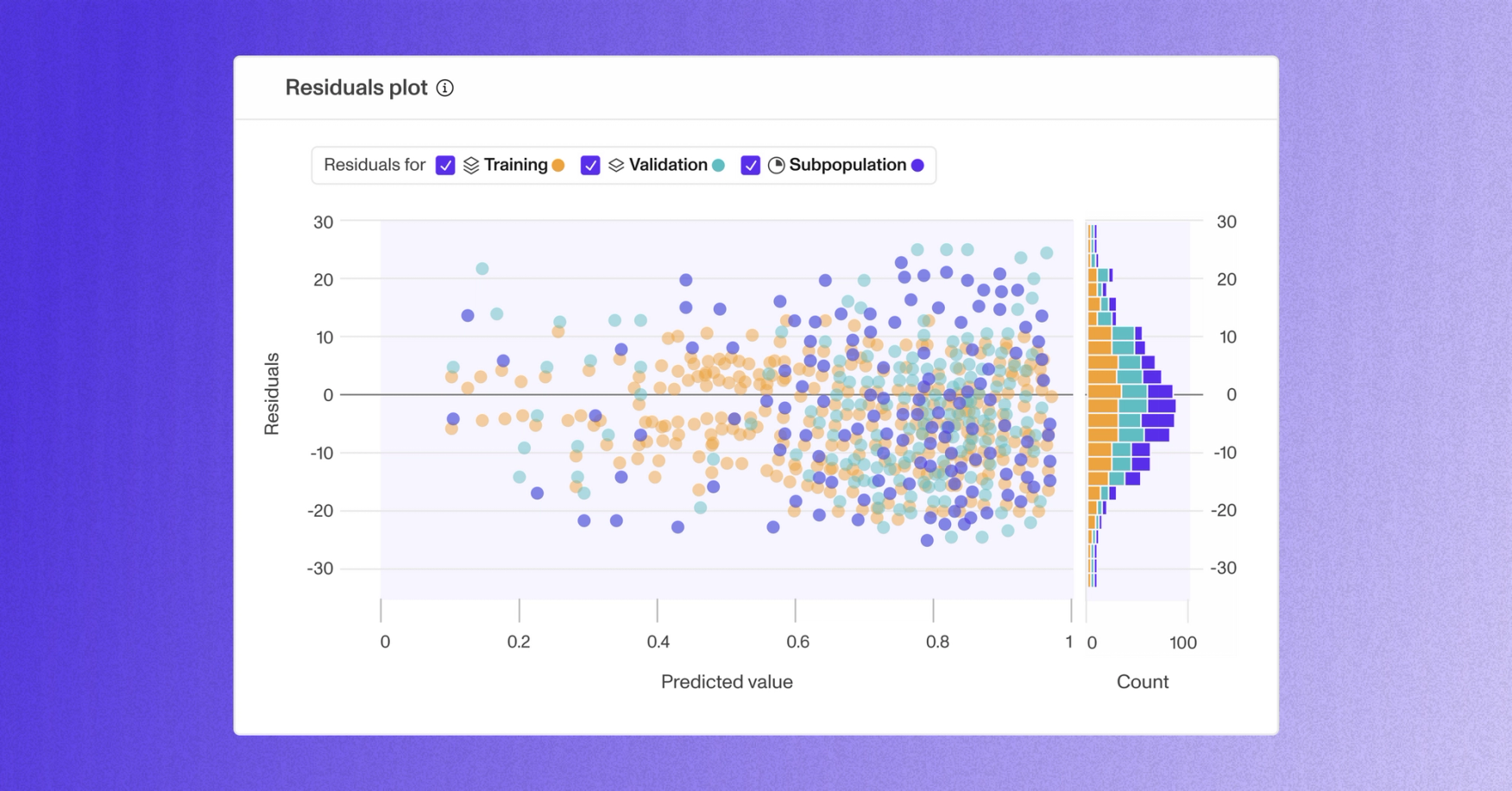

- Platform: Added column distribution graphs for LLM projects

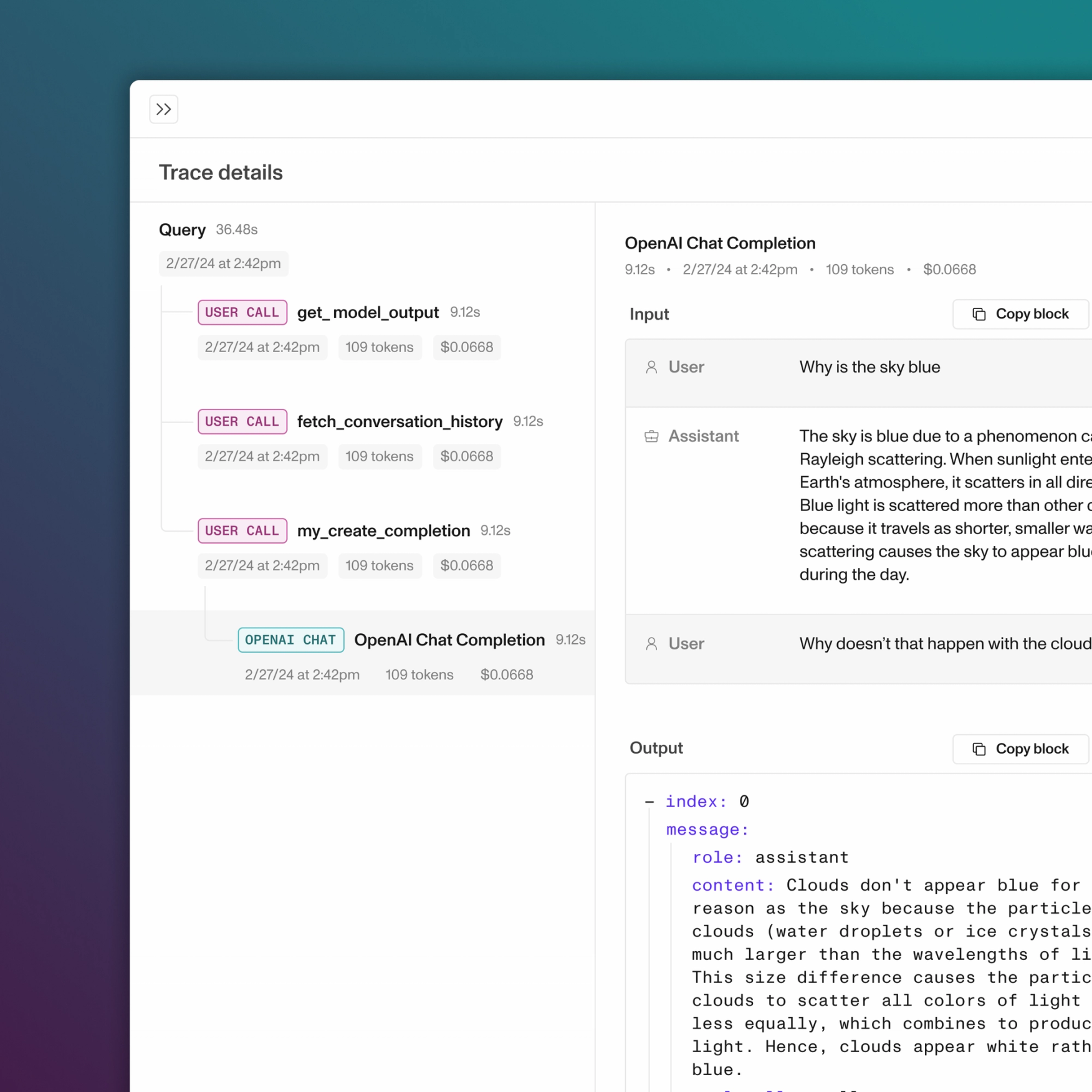

- SDKs: New integration with Google ADK

- Platform: Allow pausing test execution in Monitoring mode

- CLI: Added command to export tests for a project

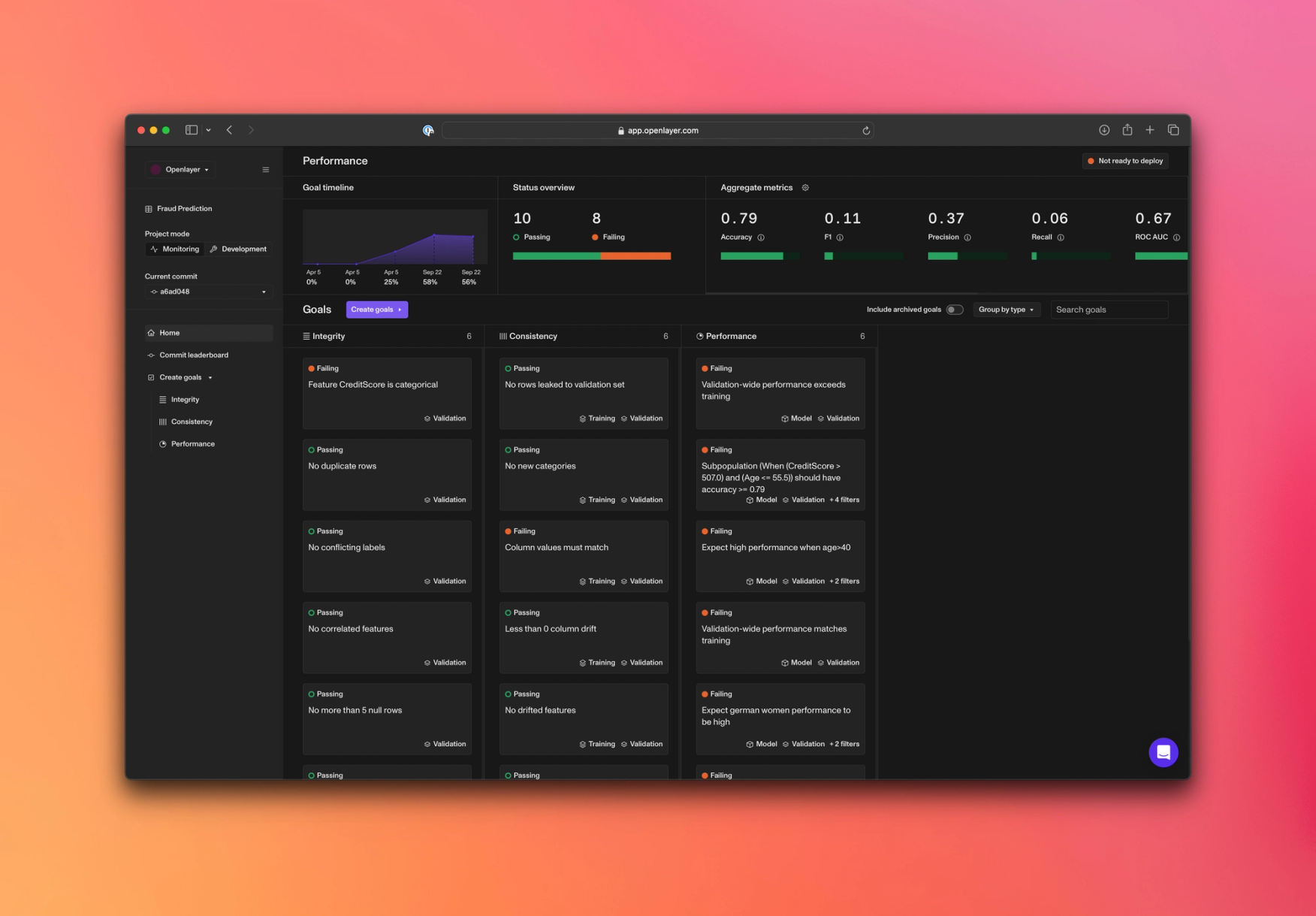

- Platform: New test to check for duplicate unique and primary keys

- Integrations: Connect to Snowflake views and run tests via remote execution

- Platform: Run custom SQL tests joining multiple tables
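To illustrate the idea behind the duplicate key test listed above, here is a minimal Python sketch. It is not Openlayer's implementation: the `find_duplicate_keys` helper and the sample rows are hypothetical and only show what a check for duplicate unique or primary keys looks like conceptually.

```python
from collections import Counter


def find_duplicate_keys(rows, key_columns):
    """Return a dict mapping each duplicated key tuple to its count.

    Hypothetical helper for illustration only.
    """
    counts = Counter(tuple(row[col] for col in key_columns) for row in rows)
    return {key: n for key, n in counts.items() if n > 1}


# Sample data: the value 2 appears twice in the "id" column.
rows = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": "b@example.com"},
    {"id": 2, "email": "c@example.com"},
]

duplicates = find_duplicate_keys(rows, ["id"])
print(duplicates)  # {(2,): 2}
```

A composite key works the same way: passing `["id", "email"]` treats each pair of values as the key, so the sample rows above would contain no duplicates.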

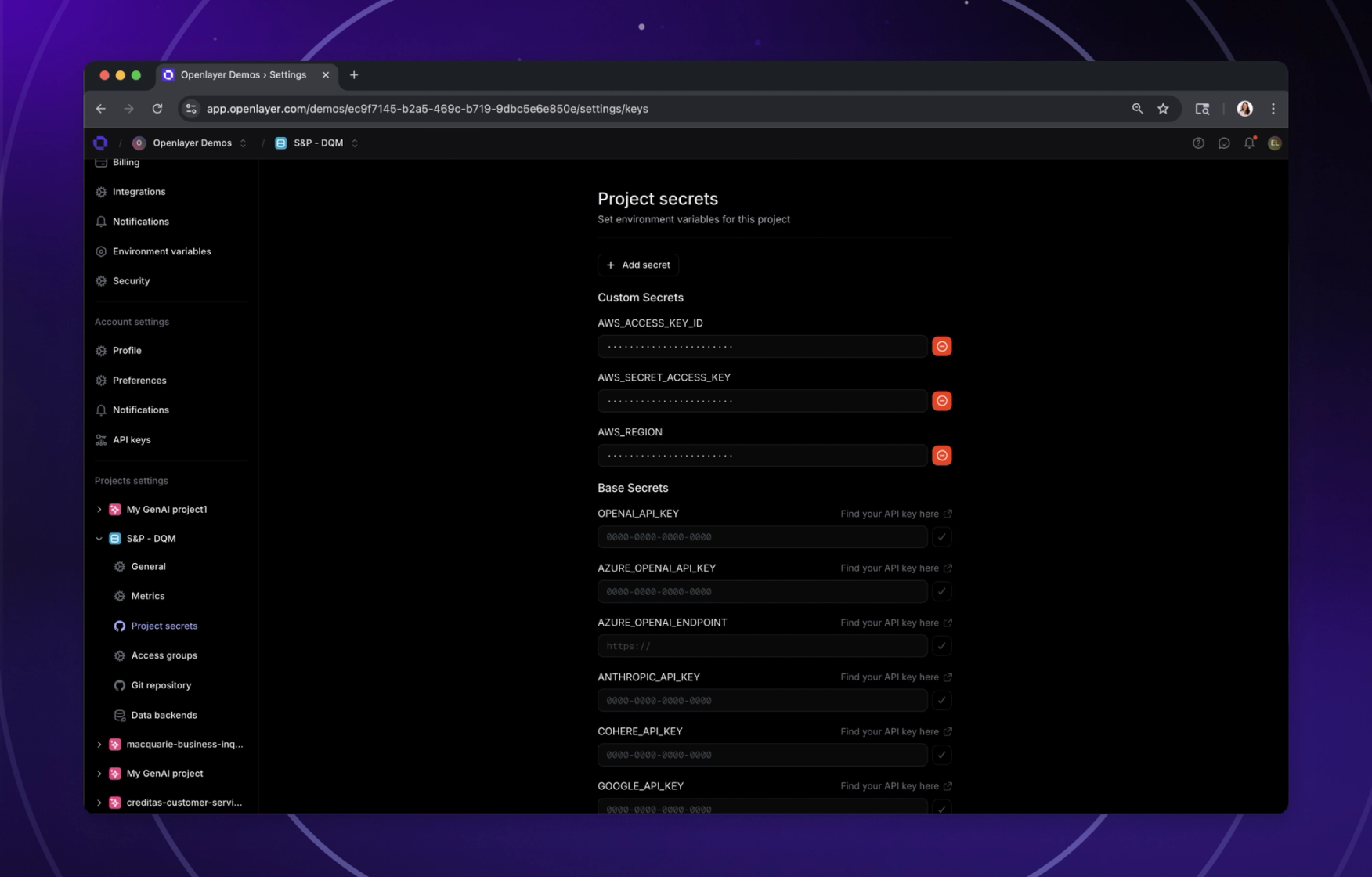

- Security: Allow specifying custom certs as environment variables to access external services

- On-Prem: Support Azure managed identity for storage connections

- Platform: Openlayer available on AWS, Azure, and Google Cloud marketplaces

Improvements

- Docs: New section in docs describing how to manage environment variables

- Platform: Allow defining specific columns to check for nulls in Null rows tests
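As a rough sketch of what restricting a null check to specific columns means, the Python snippet below flags rows with missing values either in any column or only in a chosen subset. The `rows_with_nulls` helper and the sample data are assumptions for illustration, not Openlayer's API.

```python
def rows_with_nulls(rows, columns=None):
    """Return indices of rows with a None value in the given columns.

    If columns is None, every column of each row is checked.
    Hypothetical helper for illustration only.
    """
    flagged = []
    for index, row in enumerate(rows):
        cols = columns if columns is not None else row.keys()
        if any(row.get(col) is None for col in cols):
            flagged.append(index)
    return flagged


sample = [
    {"id": 1, "name": "a", "note": None},
    {"id": 2, "name": None, "note": "x"},
    {"id": 3, "name": "c", "note": "y"},
]

all_columns = rows_with_nulls(sample)           # checks every column
name_only = rows_with_nulls(sample, ["name"])   # checks only "name"
```

Limiting the check to the columns that matter avoids flagging rows where only an optional field, such as `note` here, is empty.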

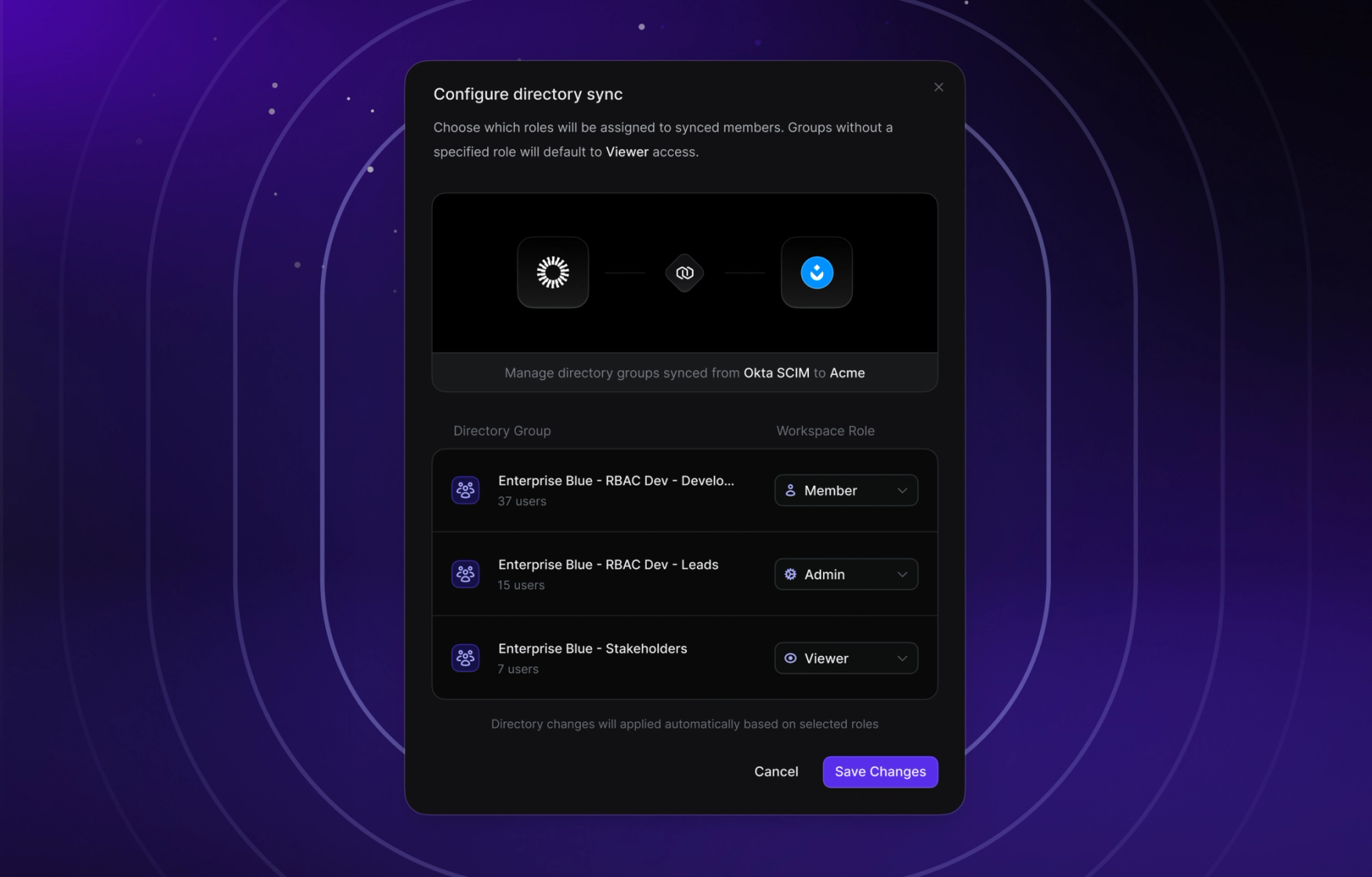

- Platform: Improved handling of directory sync race conditions around membership creation

- UI/UX: Improved empty state for graphs throughout the app

- UI/UX: UI improvements to various components, including tags, multi-selects, and toggle button groups

- UI/UX: UI improvements to table display options

- UI/UX: Improved design of multi-select components

- Docs: Improved SAML docs page

- Platform: Assign static IPs to Openlayer servers for easy allowlisting

Fixes

- UI/UX: Render higher decimal precision for test result values

- Platform: Fail gracefully on non-pandas custom metrics

- Platform: Fixed missing insights for specific test results causing the data source to error

- Security: Lock down SAML SSO logins when directory sync is enabled

- API: Prevent sending an empty request body when creating a secret

- On-Prem: Fixed nginx image name

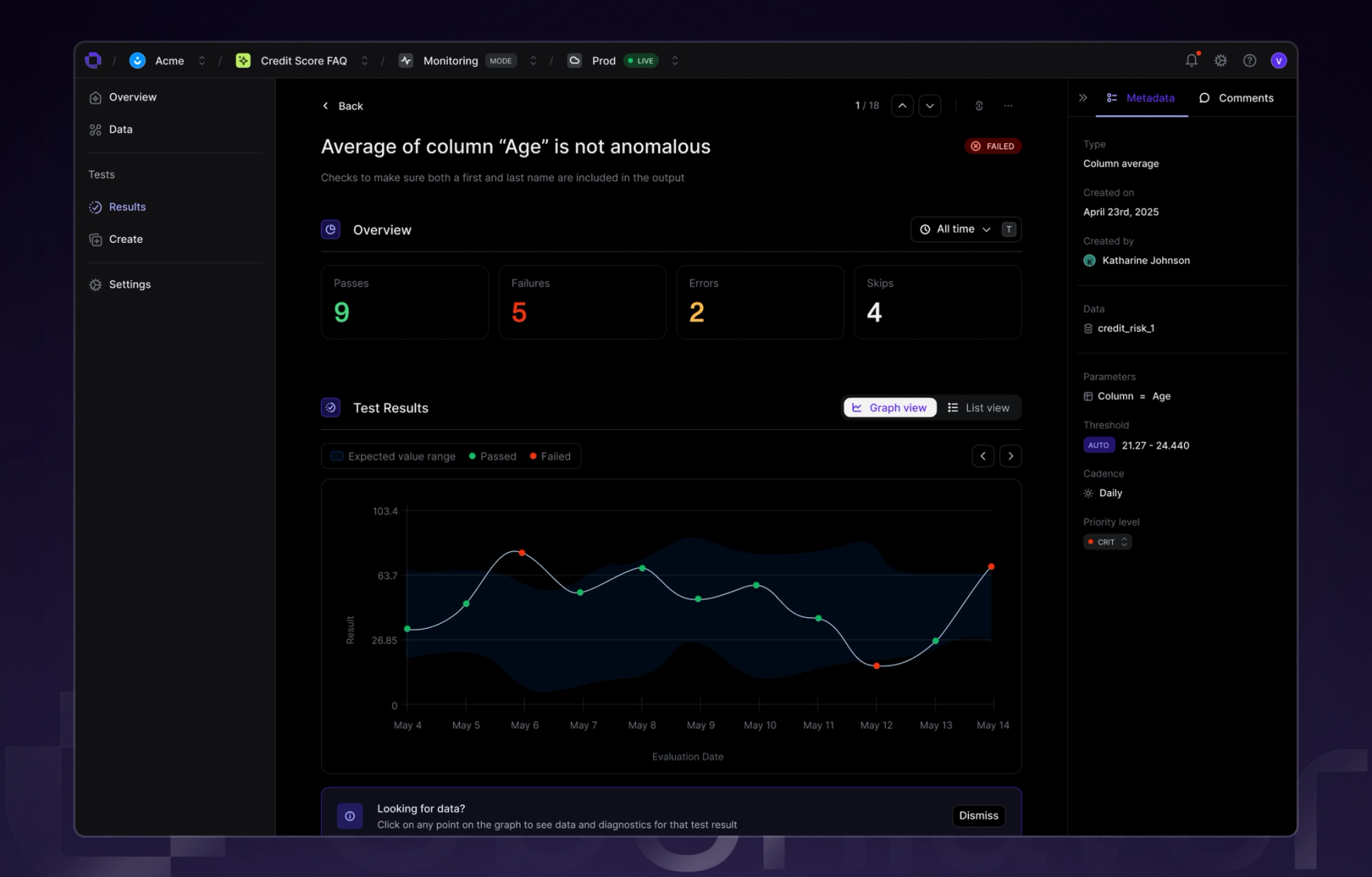

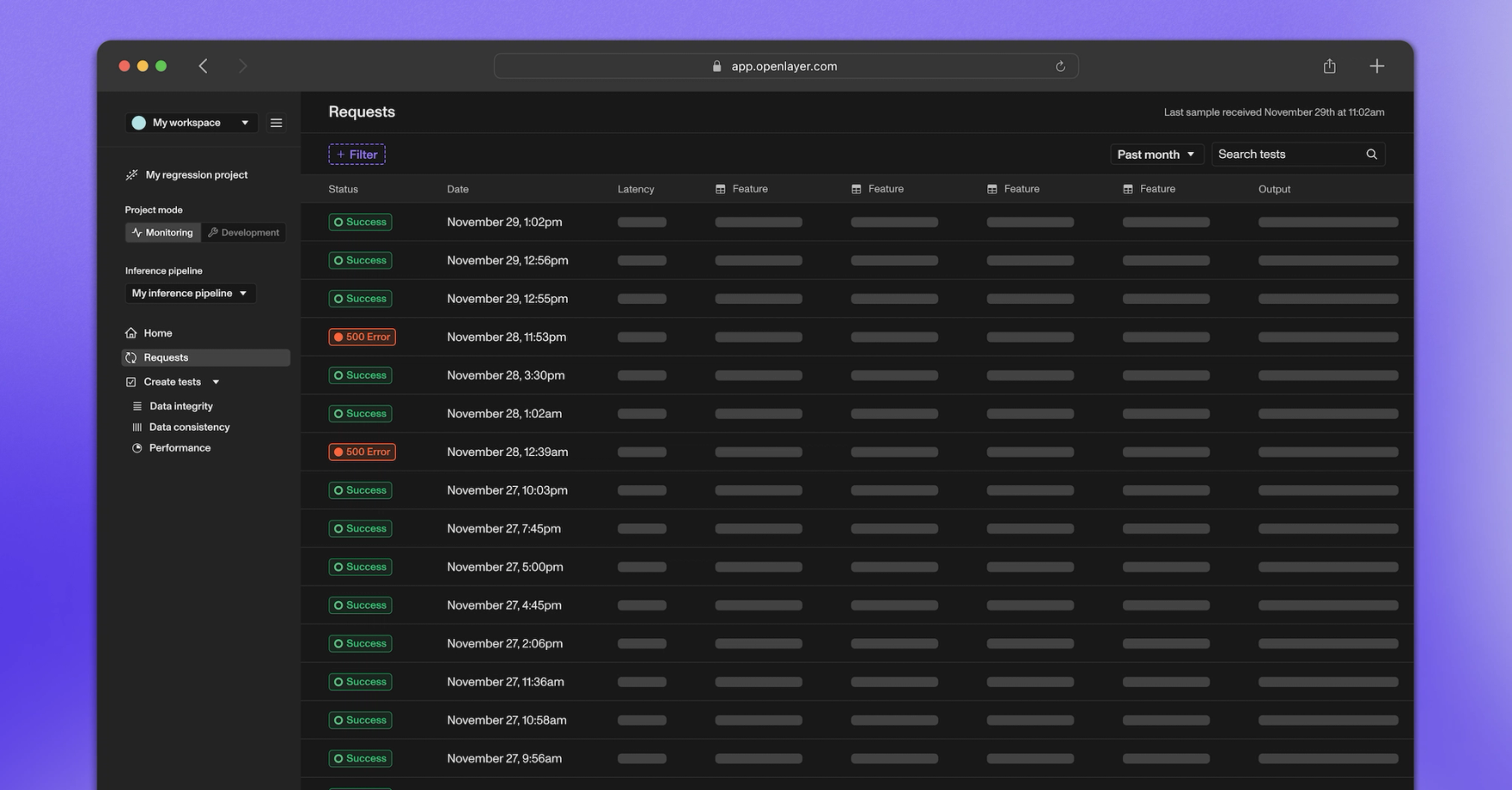

- UI/UX: Performance improvements and fixes to the monitoring test page that make it easier to view all historical test results

- UI/UX: Resolved the monitoring mode setup test not switching status correctly

- UI/UX: Fixed project frameworks table showing frameworks outside the selected project

- UI/UX: Fixed test result details not rendering in development mode

- API: Resolved OTel endpoint errors

- UI/UX: Resolved result chip rendering as unavailable on the test page

- Platform: Resolved error retrieving production data metrics

- Integrations: Resolved Slack integration errors

- Platform: Resolved SSO directory sync bugs

- API: Resolved pagination issue with listing orgs

- UI/UX: Made environment variable naming consistent in the UI

- CLI: Resolved CLI profile login not overwriting an existing profile

- UI/UX: Resolved test result data table not stretching to full height

- Integrations: Resolved query hitting BigQuery's complexity limit

- Platform: Validate that the timestamp column name exists in the table

- UI/UX: Resolved navigation issues when navigating to and back from, or deleting, a rule under a framework

- Platform: Several minor API and UI bugs and improvements